In Isaac Asimov’s short story “Runaround,” two scientists on Mercury discover they are running out of fuel for the human base. They send a robot named Speedy on a dangerous mission to collect more, but five hours later, they find Speedy running in circles and reciting nonsense.

It turns out Speedy is having a moral crisis: he is required to obey human orders, but he’s also programmed not to cause himself harm. “It strikes an equilibrium,” one of the scientists observes. “Rule three drives him back and rule two drives him forward.”

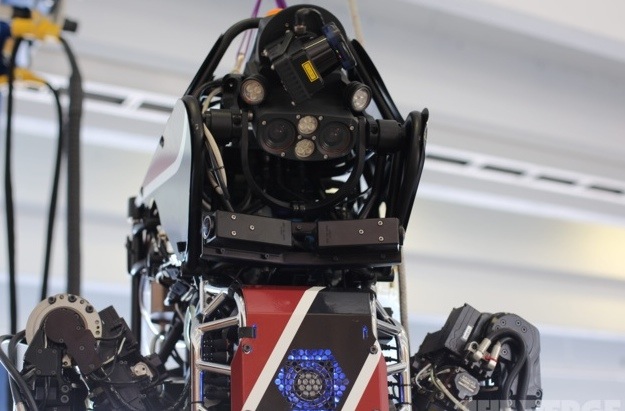

AS ROBOTS FILTER OUT INTO THE REAL WORLD, MORAL SYSTEMS BECOME MORE IMPORTANT

Asimov’s story was set in 2015, which was a little premature. But home-helper robots are a few years off, military robots are imminent, and self-driving cars are already here. We’re about to see the first generation of robots working alongside humans in the real world, where they will be faced with moral conflicts. Before long, a self-driving car will find itself in the same scenario often posed in ethics classrooms as the “trolley” hypothetical: is it better to do nothing and let five people die, or do something and kill one?